7. Scaling

In this section you will learn:

what information you need to plan for scaling

Posit Workbench architecture options

Planning to Scale

At some point, you may find that your team’s resource usage outgrows your initial Posit Workbench implementation, and you may start to think about how to scale your current architecture. A variety of options are available to scale out Workbench, including adding servers to a load-balanced cluster or integrating with external resource managers like Kubernetes or Slurm.

The right architecture for scaling is dependent on your team and use case. Before you can decide on the right architecture, it is critical that you understand how your team uses Workbench. Below we’ll cover some of the factors about your team’s usage you need to understand.

How many users are accessing the system concurrently?

The number of users concurrently accessing the system is one of the principal determinants of “load.” For example, if ten users have access to the system, but only one is on the system at any given time, then your load is one. It is good to have an idea of both the average number of concurrent sessions and the peak, as well as how regularly this peak occurs. You may also want to consider what platform adoption has looked like in the past; if you have been steadily adding users over time, you may want to budget resources for expected growth.

The default for R and Python is to use a single thread. If users are not explicitly parallelizing their code, then you can estimate that your environment will need one core per concurrent session.

What are users doing?

Here the biggest concern is RAM and CPU usage.

If users use large data sets, you will need to allocate more RAM accordingly. Potentially, the requirements of large data sets can be reduced if users are able to offload some work to a database or tools like Spark.

From a CPU usage perspective, you want to be sure the environment will not be CPU-bound. While data science sessions in R and Python are single-threaded by default - depending on the packages, code, and skill of the developer - any script could theoretically be written to leverage multi-threading. It is important to confirm if users are parallelizing their code as this can significantly change estimates of CPU needs.

There may be a few places you can get more concrete information here:

You may also have different groups of users to consider, who each behave differently on the system. Specifically, novice users may need more governance to be sure they do not accidentally consume too many resources. On the other hand, some power users need governance so they do not intentionally consume too many resources. This can be managed proactively with User and Group Profiles

How established are workflows?

If your data science workflows are long-established and stable, you may have high confidence in your usage expectations. However, if the team is newer or evolving, the type of work they conduct on the server may vary, and you may want to add more of a buffer.

The answer to this question can also provide an estimate for how frequently system dependencies may need to be managed. The OS system dependency requirements of a team with a long-established workflow may be more stable and require less management - something we’ll consider when we look at architecture options.

What is the expectation of uptime?

This will affect the “buffer” that you build in. It can also determine whether segregating into several nodes is preferable (i.e., if a user can occupy all the resources on a given machine, other users can still access another machine).

Collect this information for your team:

- How many users do you have?

- How many sessions do you expect to be running concurrently?

- What do you expect load to be on average and at peak?

- Do you have historical usage metrics you can review from users?

Architectures for Workbench

When you have a clear picture of your team’s use case and needs, you can start evaluating the best architecture for your team’s Workbench environment.

A single Posit Workbench server is how many teams get started. This architecture is the simplest, without any requirements for external shared storage. If you do not require high availability - just increasing the server size of a single Workbench node and scaling vertically can be a great strategy to scale!

Below we show a decision tree that provides a starting framework for thinking about which architecture best fits your needs. It is very likely that your organization has additional criteria that are critical to making this decision. For example, you may have specific software deployment patterns that you need to follow, like always deploying apps in a container.

In general, we recommend selecting the simplest possible architecture that meets your current and near-term needs, then growing the complexity and scale as needed.

Workbench Cluster

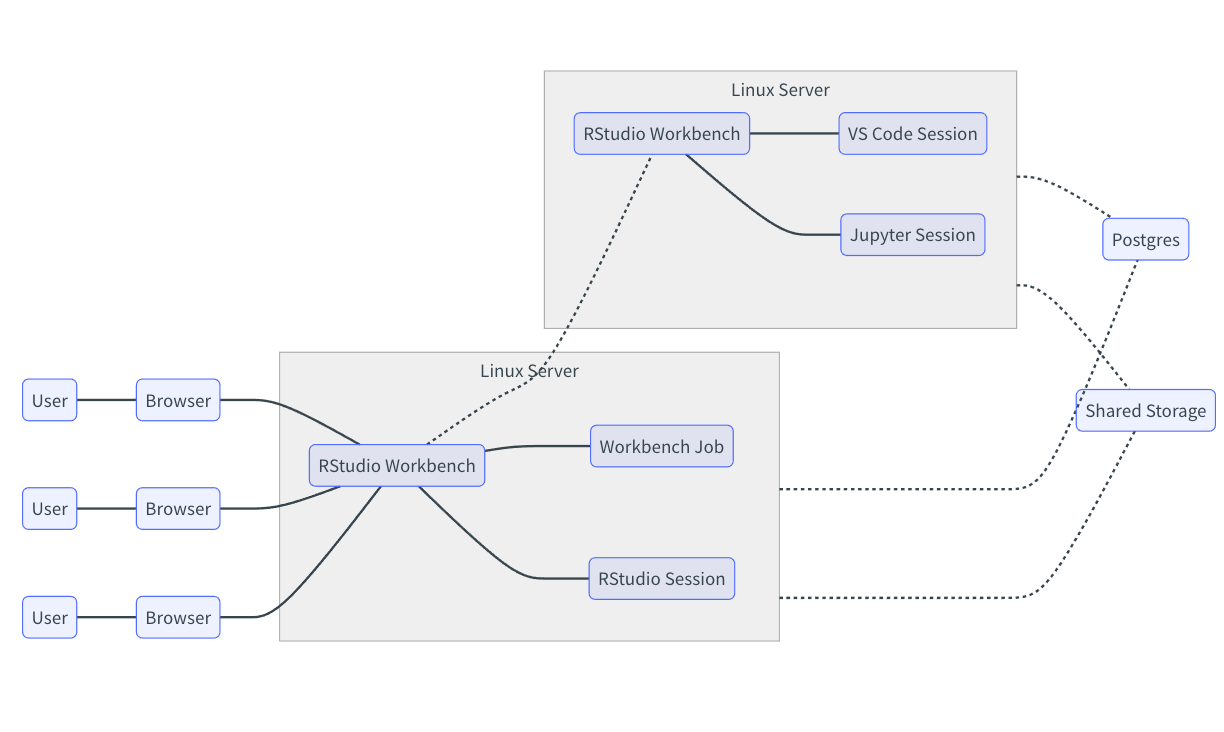

A Posit Workbench cluster has at least two Workbench Nodes, a shared file storage, and a shared PostgreSQL database. Below is an example of a two-node Workbench cluster:

In the diagram, a user accesses one of the nodes via a browser, and then Workbench’s built-in load balancer distributes new sessions between the two nodes using one of the supported balancing methods. With the built-in load balancer, the Workbench cluster is always in an active-active mode.

If you require high availability with failover, you can configure an external load balancer in front of two independent nodes.

Workbench with an external cluster (Slurm or Kubernetes)

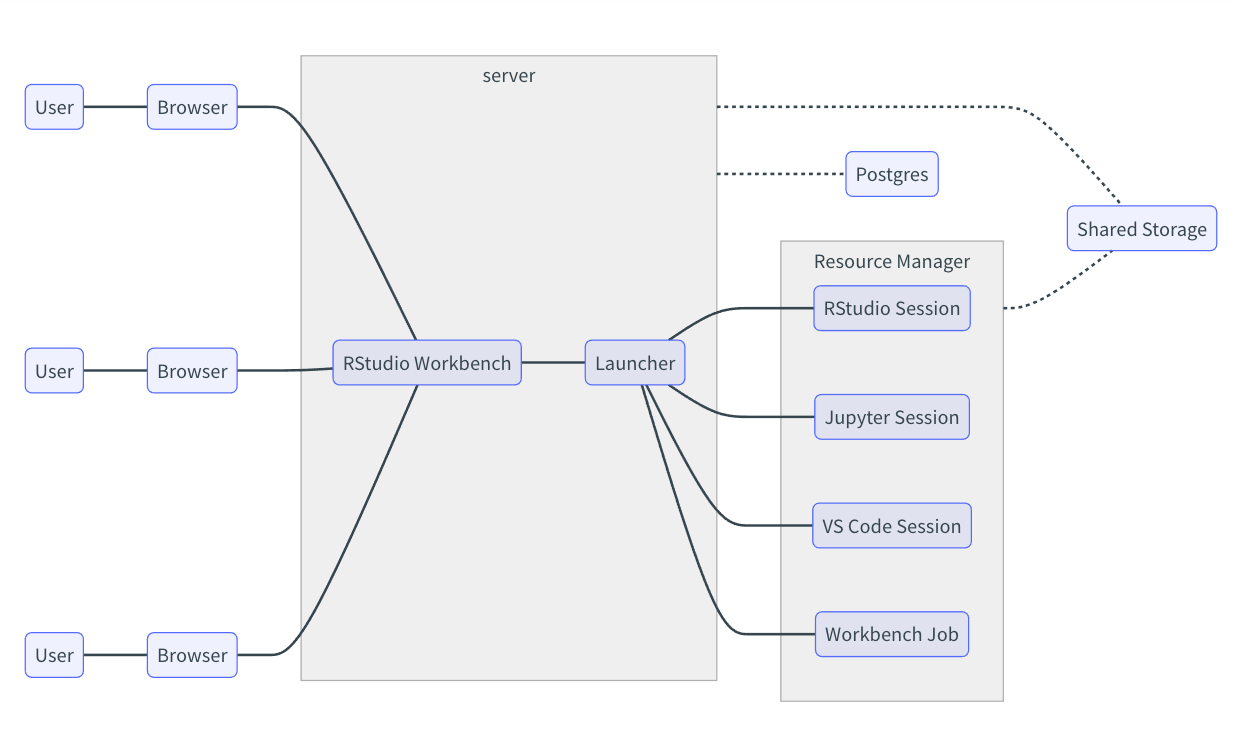

Posit Workbench uses the Launcher feature to start sessions in a Kubernetes and/or Slurm cluster. This architecture requires an external shared file server accessible to the sessions running on the resource manager (i.e., Slurm or Kubernetes) and the Workbench node. It is also recommended to have a Postgres database for session metadata. The diagram below provides an example of this architecture:

Your Workbench environment can be configured so that users can launch jobs to a Slurm cluster or a Kubernetes cluster (i.e., you can have both!)

You can also configure multiple Workbench nodes to have a highly available cluster that also launches jobs into the external resource manager.

Exercise

Using what you know about your team and its use case, consider the architecture that make sense for your team if your data science team were to double.